Love it or hate it, AI is here and it’s finding its way into software all over the world. Regardless of your opinion on the current state of AI, here at Duende Software, we just want you to do all things software-related securely.

Developers are starting to explore how AI agents can scale operational efficiency. These agents could be a powerful addition to an organization, but an LLM-only approach can be fraught with costly errors, misinformation, and hallucinations. Anything worth doing is worth doing right, but what does “right” currently look like? After all, developers want to deliver secure AI experiences, right?

In this post, we’ll discuss the Model Context Protocol, how developers can utilize the emerging protocol to deliver existing operational investments to a new audience, and, most importantly, how to securely deliver software-based value with industry best practices and spec-compliant implementations using Duende IdentityServer.

What is the Model Context Protocol (MCP)?

With the ubiquity of Large Language Models (LLMs), users have begun to realize that generalized training data can yield outcomes that fall short of expectations. LLMs operate in an iterative context enrichment loop, commonly expressed through an interactive chat session. The user types in a statement, and an LLM responds with an answer produced through a combination of training data and the current session context. It’s a natural interface for anyone who has grown up in the internet age.

While current training data sets can offer comprehensive knowledge, they lack the personalized data that makes our daily decisions impactful. What does my calendar look like? What is the exact code running my business process? How did my coworker respond to this email? These data points help inform us about what the next steps should be and how to approach a particular problem.

Initially, LLM companies believed that the quantity of data could overcome this issue, spending billions of dollars on scraping and training models. They have since realized that the quality of data is more crucial for producing better results.

In November 2024, Anthropic, the creator of Claude, introduced the Model Context Protocol (MCP) as a standardized protocol for AI applications to access data from otherwise inaccessible systems, including integrated development environments, development tools, databases, productivity tools, and other data-rich systems. Using an MCP, AI applications can request access to datasets, explore specialized tools and functions, and execute deterministic workflows. With MCP, you can introduce more high-value data into the session context, which can improve responses and lead to better outcomes. No longer is data being generated or potentially hallucinated; instead, it is a direct result of the interaction between the AI tool and the MCP servers. Verified inputs enhance the trust and reliability of systems that could become mission-critical.

Why should developers care about MCP?

Duende IdentityServer 7.4 implements RFC 8414, OAuth 2.0 Authorization Server Metadata, enabling AI workflows to enhance their security. Check it out now!

While LLM-powered applications can provide a natural-feeling experience to users, users crave something else, almost more crucial: correctness. Known inputs and reproducible outputs are cornerstones of operational success in the world’s leading industries. It’s not uncommon for organizations to have invested decades in developing systems with predictable outcomes. It’s business as usual.

What’s not business as usual is the introduction of AI agents into critical systems. Hallucinations are a common problem with LLMs, and may not be a solvable problem. Introducing AI agents without guardrails, acting on potentially incorrect information, could be catastrophic for many organizations. The question of whether AI agents can be helpful is a crucial organizational question that many teams will need to ask themselves. However, knowing that LLM-powered solutions have inherent issues, how can developers help mitigate these potential problems?

Developers know how to solve problems; in fact, seeing a problem and providing a solution is a core job competency. Developers deliver these solutions in the form of code, APIs, databases, and applications.

MCPs allow developers to inject a dose of determinism and accuracy from trusted, vetted systems into the current LLM session. An MCP implementation reduces the chance of an LLM hallucinating mission-critical data and, more importantly, the chance of a user seeing and misinterpreting non-factual information, addressing both quality and correctness.

Please note that current best practices regarding AI agents still strongly recommend human oversight, often referred to as “Human in the Loop,” to prevent unforeseen outcomes. But for developers tasked with introducing AI agents, providing an MCP solution to existing systems means that LLM systems can utilize MCPs to fill informational gaps, rather than generating those gaps with non-contextual and generalized training data.

So, what are these systems?

Maximizing AI Investments with Pre-existing Solutions

Most organizations have at least a decade of software development under their belt, with some even approaching the 70-year mark. OpenAI, arguably the start of the commercial AI era, has only existed for the last 9 years. While AI vendors market it as revolutionary, the technologies on which AI relies are mainstays of the tech industry. For the AI era to achieve prolonged success, it will require a complementary approach to protocols and systems that have been built over decades by developers and specialists. It will also rely on proprietary solutions built within organizations to reach its lofty ROI expectations. In short, for AI to work in your organization, success builds on the hard work and investments you’ve already made. What are those investments?

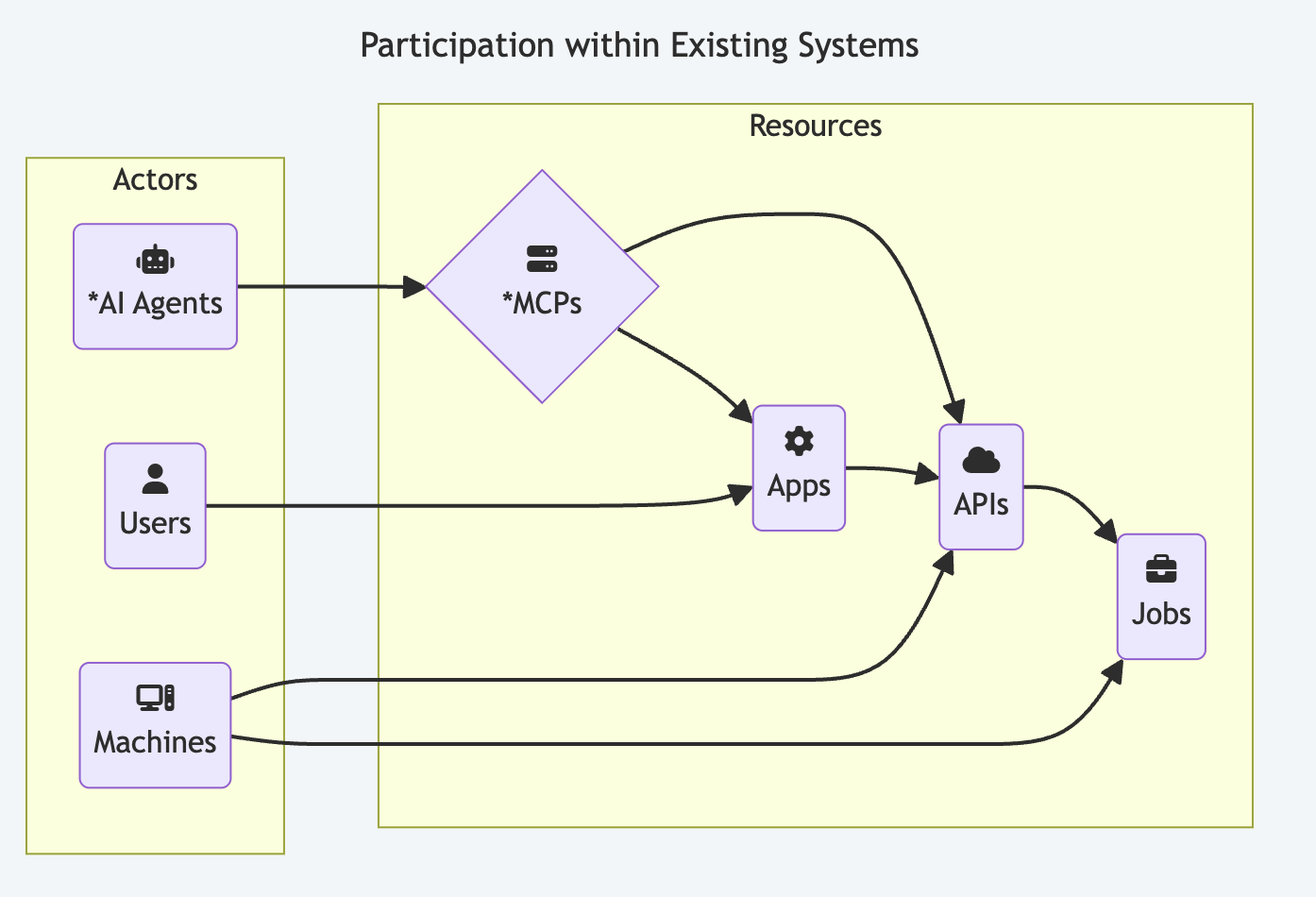

Investments that organizations may have made include building private and public APIs, designing and maintaining existing databases, long-lived workflows, and administrative tools. This infrastructure has focused on enabling human users through user interfaces and automating machine-based tasks, either with time-based events or business events. Now, MCP allows AI agents to integrate seamlessly into the current work context with minimal investment in exposing existing functionality. It’s another entry point.

This image shows the logical view of actors interacting with organizational resources in production environments. AI Agents and MCPs are new elements, but they also reflect an addition to existing systems, rather than a replacement of them.

Exposing established systems through an MCP allows developers to explore new user experiences. Some software providers have begun providing MCP implementations for their services. You could use them, but in the following section, we’ll outline why we think building your own MCP might be the better option.

MCP Architecture, Design, and Solution Planning

So, you’ve decided to build your own MCP servers. The good news is that it’s fairly straightforward and uses much of the infrastructure you likely already have within your organization. Between servers, APIs, and Identity Providers (IdPs) such as Duende IdentityServer, you likely have everything you need to get started.

At Duende, security is of utmost importance. Before exposing mission-sensitive data and functions to AI agents, consider securing access to existing functionality using current best practices and security protocols, such as OpenID Connect and OAuth.

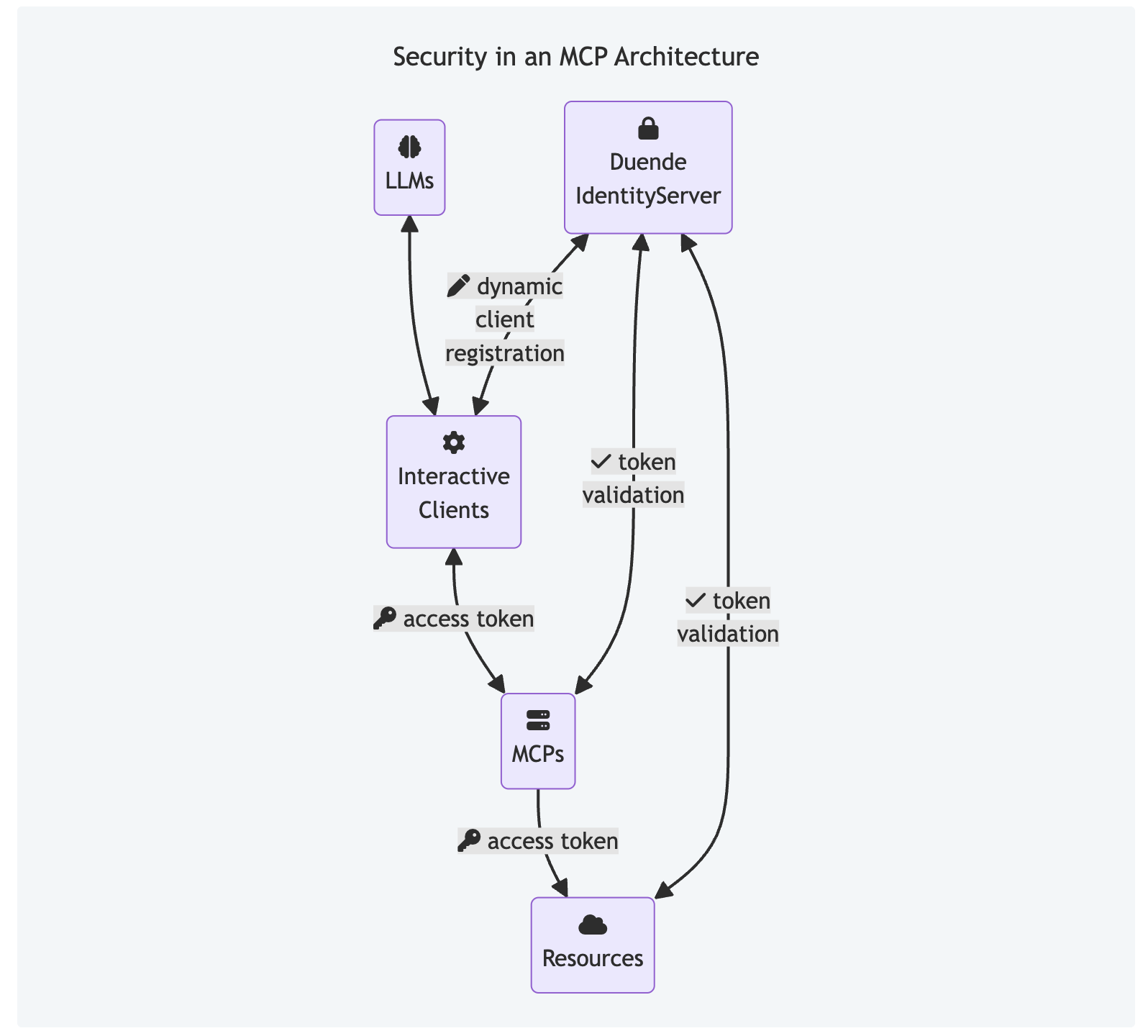

When introducing MCPs and AI Agents into your environment, consider the four critical logical elements of your architecture: the interactive client, the MCP server, the resources, and the security system that enables secure communication between all parties.

Let’s see what that might look like conceptually and what operations may occur between each party.

This image shows an architecture where clients, MCPs, and resources may need to communicate securely. Duende IdentityServer, as an identity provider, introduces industry-recognized security using OAuth, with client registration and access tokens.

While the diagram illustrates parties in a solution, what might be some concrete examples of each element?

Interactive Clients

Interactive clients typically include user-facing applications such as word processors, chat clients, web applications, or native desktop applications. These tools often serve as the interaction point between a human, an LLM provider, and one or more MCP servers. Note that clients are the glue that connects MCPs to LLMs.

In practice, the client serves as a proxy, enabling LLMs to access MCP functionality while allowing the client to execute those tools, thereby bringing the results of the tools into the session context. At no point does an LLM provider have direct execution privileges to your secure environments, but interactive clients do.
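To make the proxy role more concrete, here is a minimal C# sketch of the request an interactive client might send after the LLM asks it to run a tool. MCP messages use JSON-RPC 2.0, and tools/call is the protocol’s method for invoking a tool (tools/list is its counterpart for discovering tools). The endpoint, tool name, arguments, and token are hypothetical placeholders, and a real client would complete MCP’s initialize handshake first, which this sketch omits.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;
using System.Text.Json.Nodes;

// Builds a JSON-RPC 2.0 "tools/call" message: the MCP request a client sends
// when the LLM asks it to run a tool.
string BuildToolCall(int id, string toolName, JsonObject arguments)
{
    var message = new JsonObject
    {
        ["jsonrpc"] = "2.0",
        ["id"] = id,
        ["method"] = "tools/call",
        ["params"] = new JsonObject
        {
            ["name"] = toolName,
            ["arguments"] = arguments
        }
    };
    return message.ToJsonString();
}

// Wraps the message in an HTTP POST. The client, not the LLM provider,
// holds the user's access token and decides whether the call goes out.
HttpRequestMessage BuildHttpRequest(Uri mcpEndpoint, string accessToken, string json)
{
    var request = new HttpRequestMessage(HttpMethod.Post, mcpEndpoint)
    {
        Content = new StringContent(json, Encoding.UTF8, "application/json")
    };
    request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", accessToken);
    // The Streamable HTTP transport lets the server reply with JSON or an SSE stream.
    request.Headers.Accept.Add(new MediaTypeWithQualityHeaderValue("application/json"));
    request.Headers.Accept.Add(new MediaTypeWithQualityHeaderValue("text/event-stream"));
    return request;
}

// Hypothetical tool name, arguments, endpoint, and token.
var json = BuildToolCall(2, "get_alerts", new JsonObject { ["state"] = "NY" });
using var request = BuildHttpRequest(new Uri("https://localhost:7141/"), "hypothetical-access-token", json);
Console.WriteLine(json);
```

The access token appears only in the client’s outgoing request; the LLM provider sees the tool’s result once the client adds it to the session context.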

MCP and the Servers That Host Them

From a technical perspective, MCPs serve as the gateway to your organization’s world of APIs, data, and operational systems. In fact, the official MCP site describes the protocol as “a USB-C port for AI applications.” When working with MCPs, you have two options: use a service-provided MCP or build your own.

Suppose your organization utilizes third-party services, such as Microsoft Office 365, GitHub, Google Workspace, Slack, Figma, or similar offerings. In that case, you likely already have access to a world of prebuilt MCP implementations. These MCPs expose specific tools and data from these services, and they may already be sufficient to gain insight into the power the protocol can provide to existing AI workflows. There are also MCP implementations for low-level or local resources, such as PostgreSQL databases, Git, and File system access, among others.

When building your own MCPs, consider what LLMs you will be using in your workflows. While LLMs are becoming increasingly capable in their ability to perform tasks, they still have several limitations, including speed, token limits, accuracy, and reasoning capabilities.

Building a custom MCP enables you, the software developer, to encapsulate as much behavior as possible behind an MCP’s tool or data point, thereby limiting the likelihood of unexpected or undesirable interactions between tools as a session continues. Another added benefit of building your own MCP implementation is that you can enforce your own set of safeguards tailored to your specific requirements. Integration with an IdP like Duende IdentityServer can allow you to centralize security, rather than relying on each third-party service’s security practices. Building your own MCP is a low-effort task that can yield rewarding outcomes.

Resources

Resources are the systems you already have within your organization. These resources include APIs, databases, third-party services, scripts, and recurring jobs. The essential consideration with resources is that all clients, whether they are humans, machines, or AI agents, access them securely using current industry best practices. In the case of most modern systems, developers built their security on the standards of OAuth and OpenID Connect. Just because something's new doesn’t mean we should abandon our security posture. In our opinion, at Duende, high security hygiene is never a matter for compromise.

Architectural Choices

Now that you understand all the elements, you may want to consider the various choices you’ll need to make to ensure a successful implementation. We’ve provided a set of questions below to ask yourself or within your organization:

What types of clients will interact with LLMs and MCPs?

- Do I want to build custom UIs or use existing interactive clients?

- Who is using these clients, and what are they trying to accomplish?

Which MCP do I need to get a working solution?

- Do I want to use a third-party MCP?

- Do I want to build my own MCP?

What are the most essential resources to securely expose?

- Are they APIs?

- Are they databases?

- Are they jobs and services?

How will I supervise AI agents?

- What parts of the process need human involvement?

- How do I verify results?

- How well do I understand and plan for “worst case” scenarios?

How do I manage security?

- How do I register and manage clients (interactive clients, MCPs, and Resources)?

- How do elements communicate securely with each other?

Asking yourself these questions can help you determine if you’re ready to take the next step and start building your own MCP.

In the following sections, we’ll show you how to use Duende IdentityServer’s Dynamic Client Registration to register interactive clients securely, how to build a custom .NET MCP server with the MCP C# SDK, and how an interactive client might call your new MCP’s tools.

Enabling Dynamic Client Registration in Duende IdentityServer

Interactive clients are the interface through which human users will interact with your new AI investments. Additionally, you can think of AI agents as self-contained interactive clients. Both interactive clients and AI agents must register with your IdP to operate within your security context.

Securing AI workloads is a rapidly evolving space. At the time of writing, the AI industry is attempting to use Dynamic Client Registration (DCR) as a means for interactive clients to introduce themselves and register with a specific identity provider, delegating a user’s permissions to the tasks at hand.
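Under the hood, a DCR request is an HTTP POST of client metadata, defined by RFC 7591, to the identity provider’s registration endpoint. The sketch below assembles such a request for a public client that signs users in through a browser. It is a sketch under assumptions: the registration URL, client name, and loopback redirect URI are hypothetical, and which metadata an identity provider accepts depends on how it is configured.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Json;
using System.Text.Json.Nodes;

// Client metadata for a public client, using field names from RFC 7591.
JsonObject BuildRegistrationMetadata(string clientName, string redirectUri, string scope) => new()
{
    ["client_name"] = clientName,
    ["redirect_uris"] = new JsonArray(redirectUri),
    ["grant_types"] = new JsonArray("authorization_code", "refresh_token"),
    ["response_types"] = new JsonArray("code"),
    // "none": the client holds no secret and relies on PKCE instead.
    ["token_endpoint_auth_method"] = "none",
    ["scope"] = scope
};

// The metadata is POSTed as JSON; on success (HTTP 201) the response body
// contains the client_id generated by the identity provider.
HttpRequestMessage BuildRegistrationRequest(Uri registrationEndpoint, JsonObject metadata) =>
    new(HttpMethod.Post, registrationEndpoint) { Content = JsonContent.Create(metadata) };

// Hypothetical values; a real client reads the endpoint from the discovery document.
var metadata = BuildRegistrationMetadata(
    "Weather MCP Client", "http://localhost:8765/callback", "openid profile mcp:tools");
using var request = BuildRegistrationRequest(new Uri("https://localhost:5001/connect/dcr"), metadata);
Console.WriteLine(metadata.ToJsonString());
```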

In this section, we’ll show how you can enable Duende IdentityServer’s DCR capabilities to allow for self-registration. Note that DCR is a Duende IdentityServer feature, available only to Business and Enterprise license holders. To continue, you will need an instance of Duende IdentityServer, which you can create using our Duende Templates.

You can create the following code by starting with the Duende IdentityServer In-Memory Stores and Test Users (duende-is-inmem) template. Note that in a production scenario, you’ll want to move from an in-memory option to persistent storage, but we’ll keep this demo code as simple as possible.

From your favorite developer tool, start by generating a new IdentityServer instance from the duende-is-inmem template. We’ll be making several updates to configure your instance for MCP support. Let’s look at the changes required on a file-by-file basis.

In the .csproj file of your IdentityServer host project, we need to make two small changes:

- Upgrade the Duende.IdentityServer NuGet package to version 7.4, as it includes enhancements for MCP scenarios.

- Add a reference to the Duende.IdentityServer.Configuration NuGet package, targeting the same version as the Duende.IdentityServer package.

Once you have made these updates to your csproj file, you should have the following PackageReference elements:

```xml
<Project Sdk="Microsoft.NET.Sdk.Web">

  <PropertyGroup>
    <TargetFramework>net10.0</TargetFramework>
    <ImplicitUsings>enable</ImplicitUsings>
    <Nullable>enable</Nullable>
  </PropertyGroup>

  <ItemGroup>
    <PackageReference Include="Duende.IdentityServer" Version="7.4.0" />
    <PackageReference Include="Duende.IdentityServer.Configuration" Version="7.4.0" />
    <PackageReference Include="Serilog.AspNetCore" Version="8.0.3" />
  </ItemGroup>

</Project>
```

Next, you’ll need to configure IdentityServer for scopes and API resources that the MCP server will reference, which you’ll build later in this tutorial. Make these changes in the Config.cs file:

- Remove the Clients property, as there will be no static clients in this tutorial.

- Update the ApiScopes property to support the scope used by the MCP server.

- Add an ApiResources property along with an API resource to describe the MCP API protected by this instance of IdentityServer.

The result of the Config.cs file looks like this:

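```csharp
using Duende.IdentityServer.Models;

public static class Config
{
    public static IEnumerable<IdentityResource> IdentityResources =>
    [
        new IdentityResources.OpenId(),
        new IdentityResources.Profile()
    ];

    // The API resource name becomes the audience (aud) of access tokens issued
    // for the MCP server, so it matches the MCP server's URL.
    public static IEnumerable<ApiResource> ApiResources =>
    [
        new("https://localhost:7141/", "MCP Server")
        {
            Scopes = { "mcp:tools" }
        }
    ];

    public static IEnumerable<ApiScope> ApiScopes =>
    [
        new("mcp:tools")
    ];
}
```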

You will use the scopes and API resource values shortly to support the MCP server portion of the demo.

Finally, you need to change a few lines in the HostingExtensions.cs file. The first change is inside the options block passed to AddIdentityServer. Inside that block, add the following line, which adds an entry for the Dynamic Client Registration endpoint to the Authorization Server Metadata:

```csharp
// this will add the default dynamic client registration endpoint to the discovery/metadata documents
options.Discovery.DynamicClientRegistration.RegistrationEndpointMode = RegistrationEndpointMode.Inferred;
```

This option advertises the registration endpoint in the discovery metadata, which tells clients where to send a request to register themselves with IdentityServer.
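Clients locate that endpoint by reading the authorization server metadata. RFC 8414 builds the metadata URL by inserting /.well-known/oauth-authorization-server between the issuer’s host and any path component; OpenID Connect clients read the equivalent /.well-known/openid-configuration document. The C# sketch below shows both halves: computing the RFC 8414 URL and pulling registration_endpoint out of the JSON. The sample payload, including the /connect/dcr path, is a hypothetical, trimmed-down example rather than output captured from IdentityServer.

```csharp
using System;
using System.Text.Json;

// RFC 8414, section 3: insert the well-known segment between host and path.
Uri MetadataUrl(Uri issuer)
{
    // "" for an issuer without a path, otherwise e.g. "/tenant1"
    var path = issuer.AbsolutePath.TrimEnd('/');
    return new Uri($"{issuer.GetLeftPart(UriPartial.Authority)}/.well-known/oauth-authorization-server{path}");
}

// Returns the advertised DCR endpoint, or null when the server does not offer one.
string? RegistrationEndpoint(string metadataJson)
{
    using var doc = JsonDocument.Parse(metadataJson);
    return doc.RootElement.TryGetProperty("registration_endpoint", out var value)
        ? value.GetString()
        : null;
}

// Hypothetical, trimmed-down metadata document.
var sampleMetadata = """
    {
      "issuer": "https://localhost:5001",
      "registration_endpoint": "https://localhost:5001/connect/dcr"
    }
    """;

Console.WriteLine(MetadataUrl(new Uri("https://localhost:5001")));
Console.WriteLine(RegistrationEndpoint(sampleMetadata));
```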

Next, adjust the section of the ConfigureServices method concerned with configuring the resources protected by this instance of IdentityServer by finding the line containing the call to AddInMemoryClients and replacing it with the following lines:

```csharp
isBuilder.AddInMemoryClients([]);
isBuilder.AddInMemoryApiResources(Config.ApiResources);
```

These lines register an empty in-memory client store and configure the API resource that represents the MCP server protected by IdentityServer.

After that code, add the following block to register the necessary components to support Dynamic Client Registration:

```csharp
builder.Services.AddIdentityServerConfiguration(_ => { })
    // in memory is being used here to keep the demo simple. in a real scenario, a persistent storage
    // mechanism is needed for client registrations to persist across application restarts
    .AddInMemoryClientConfigurationStore();
```

Then add a call to app.MapDynamicClientRegistration() in the ConfigurePipeline method, right before the method returns app.

With that, you should now have configured IdentityServer appropriately to allow Dynamic Client Registration and protect the MCP instance in this demo.

The complete ConfigureServices method should now look like this:

```csharp
public static WebApplication ConfigureServices(this WebApplicationBuilder builder)
{
    builder.Services.AddRazorPages();

    var isBuilder = builder.Services.AddIdentityServer(options =>
        {
            // this will add the default dynamic client registration endpoint to the discovery/metadata documents
            options.Discovery.DynamicClientRegistration.RegistrationEndpointMode = RegistrationEndpointMode.Inferred;

            options.Events.RaiseErrorEvents = true;
            options.Events.RaiseInformationEvents = true;
            options.Events.RaiseFailureEvents = true;
            options.Events.RaiseSuccessEvents = true;

            // Use a large chunk size for diagnostic logs in development where it will be redirected to a local file
            if (builder.Environment.IsDevelopment())
            {
                options.Diagnostics.ChunkSize = 1024 * 1024 * 10; // 10 MB
            }
        })
        .AddTestUsers(TestUsers.Users)
        .AddLicenseSummary();

    // in-memory, code config
    isBuilder.AddInMemoryIdentityResources(Config.IdentityResources);
    isBuilder.AddInMemoryApiScopes(Config.ApiScopes);

    // since this will use DCR, we do not need any pre-configured clients
    isBuilder.AddInMemoryClients([]);
    isBuilder.AddInMemoryApiResources(Config.ApiResources);

    builder.Services.AddIdentityServerConfiguration(_ => { })
        // in memory is being used here to keep the demo simple. in a real scenario, a persistent storage
        // mechanism is needed for client registrations to persist across application restarts
        .AddInMemoryClientConfigurationStore();

    // AddTransient (rather than TryAddTransient, which is a no-op once a default validator
    // is registered) so the custom validator takes precedence. CustomDynamicClientRegistrationValidator
    // is application code that this post does not show.
    builder.Services.AddTransient<IDynamicClientRegistrationValidator, CustomDynamicClientRegistrationValidator>();

    builder.Services.AddAuthentication()
        .AddOpenIdConnect("oidc", "Sign-in with demo.duendesoftware.com", options =>
        {
            options.SignInScheme = IdentityServerConstants.ExternalCookieAuthenticationScheme;
            options.SignOutScheme = IdentityServerConstants.SignoutScheme;
            options.SaveTokens = true;

            options.Authority = "https://demo.duendesoftware.com";
            options.ClientId = "interactive.confidential";
            options.ClientSecret = "secret";
            options.ResponseType = "code";

            options.TokenValidationParameters = new TokenValidationParameters
            {
                NameClaimType = "name",
                RoleClaimType = "role"
            };
        });

    return builder.Build();
}
```
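The listing registers a CustomDynamicClientRegistrationValidator whose implementation this post does not show. Purely as a hypothetical illustration of the kind of policy such a validator might enforce, the sketch below checks redirect URIs and requested scopes using plain .NET types. It deliberately does not use Duende’s validator API; the rules (HTTPS or loopback redirect URIs without fragments, scopes limited to an allow list) and every name in it are assumptions.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical policy: the only scopes a dynamically registered client may request.
var allowedScopes = new HashSet<string>(StringComparer.Ordinal) { "openid", "profile", "mcp:tools" };

// Accept HTTPS redirect URIs, or plain HTTP only on a loopback address
// (native clients commonly listen on localhost for the callback). No fragments.
bool IsAcceptableRedirectUri(string value) =>
    Uri.TryCreate(value, UriKind.Absolute, out var uri)
    && (uri.Scheme == Uri.UriSchemeHttps || (uri.Scheme == Uri.UriSchemeHttp && uri.IsLoopback))
    && string.IsNullOrEmpty(uri.Fragment);

// Returns a list of problems; an empty list means the request passes this policy.
List<string> Validate(IEnumerable<string> redirectUris, string requestedScope)
{
    var errors = new List<string>();
    var uris = redirectUris.ToList();
    if (uris.Count == 0)
        errors.Add("at least one redirect_uri is required");
    errors.AddRange(uris.Where(u => !IsAcceptableRedirectUri(u))
        .Select(u => $"redirect_uri not allowed: {u}"));
    var unknownScopes = requestedScope.Split(' ', StringSplitOptions.RemoveEmptyEntries)
        .Where(s => !allowedScopes.Contains(s));
    errors.AddRange(unknownScopes.Select(s => $"scope not allowed: {s}"));
    return errors;
}

var ok = Validate(new[] { "http://localhost:8765/callback" }, "openid mcp:tools");
var rejected = Validate(new[] { "http://evil.example/cb" }, "openid admin");
Console.WriteLine(ok.Count);
Console.WriteLine(string.Join("; ", rejected));
```

Keeping the policy in a plain function like this makes it easy to unit test before wiring it into whatever extension point your identity provider offers.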

Building a Secure Server with MCP C# SDK

Now that you’ve configured Duende IdentityServer with Dynamic Client Registration, it can support AI clients and the MCP servers they will inevitably call. But first, let’s build a custom MCP server that encapsulates a weather API for our AI clients to use.

In your favorite developer tool, start by creating an empty web application using the .NET templates provided by the .NET SDK. Once created, add the following packages to the newly created project:

- ModelContextProtocol.AspNetCore: provides the building blocks for creating an MCP server.

- Microsoft.AspNetCore.Authentication.JwtBearer: adds the functionality for validating the tokens that IdentityServer creates and the client sends in its requests to the secure MCP server.

The code in the MCP server should feel familiar to any .NET developer who has previously created an API. The differences are in the code specific to the MCP. Let’s examine the setup and highlight the differences.

Let’s start in the Program.cs file by configuring our ASP.NET Core host. Anyone who has secured an API with JWT Bearer authentication in the past should find the following code familiar:

```csharp
using System.Net.Http.Headers;                        // ProductInfoHeaderValue (used further down)
using Microsoft.AspNetCore.Authentication.JwtBearer;  // JwtBearerDefaults
using Microsoft.IdentityModel.Tokens;                 // TokenValidationParameters
using ModelContextProtocol.AspNetCore.Authentication; // McpAuthenticationDefaults
using ModelContextProtocol.Authentication;

var builder = WebApplication.CreateBuilder(args);

var serverUrl = "https://localhost:7141";
var inMemoryOAuthServerUrl = "https://localhost:5001/";

builder.Services.AddAuthentication(options =>
    {
        options.DefaultChallengeScheme = McpAuthenticationDefaults.AuthenticationScheme;
        options.DefaultAuthenticateScheme = JwtBearerDefaults.AuthenticationScheme;
    })
    .AddJwtBearer(options =>
    {
        options.Authority = inMemoryOAuthServerUrl;
        options.TokenValidationParameters = new TokenValidationParameters
        {
            ValidateIssuerSigningKey = true,
            ValidAudience = serverUrl,
            ValidIssuer = inMemoryOAuthServerUrl,
            NameClaimType = "name",
            RoleClaimType = "role"
        };
    })
    .AddMcp(options =>
    {
        options.ResourceMetadata = new()
        {
            Resource = new Uri(serverUrl),
            ResourceDocumentation = new Uri("https://docs.example.com/api/weather"),
            AuthorizationServers = { new Uri(inMemoryOAuthServerUrl) },
            ScopesSupported = ["mcp:tools"]
        };
    });

builder.Services.AddAuthorization();
```

We first set the DefaultChallengeScheme to McpAuthenticationDefaults.AuthenticationScheme to configure the authentication pipeline to use the appropriate authentication handler for MCP when a client attempts to access a protected resource.

We then follow up with a call to AddJwtBearer, which validates the access tokens issued by IdentityServer, and a chained call to AddMcp. The options passed to AddMcp set the values for the protected resource metadata. The MCP server exposes this metadata at an endpoint and, as the name implies, it describes the protected resource. A client attempting to interact with the MCP server can call the metadata endpoint to determine which authorization server(s) it should interact with and which scopes it needs to request. With that information, a client can make a dynamic client registration request to the appropriate authorization server and then obtain an access token, which it uses to call the MCP server.
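On the client side, that discovery step might look roughly like the following sketch. It assumes the flow described by RFC 9728 (OAuth 2.0 Protected Resource Metadata) and the MCP authorization specification: an unauthenticated request receives a 401 whose WWW-Authenticate header carries a resource_metadata URL, and the JSON at that URL lists the authorization servers and supported scopes. The header value and payload are hypothetical samples shaped after the AddMcp options above, and the header parsing is deliberately simplistic.

```csharp
using System;
using System.Linq;
using System.Text.Json;

// Extracts the resource_metadata URL from a challenge such as:
// Bearer resource_metadata="https://host/.well-known/oauth-protected-resource"
string? ResourceMetadataUrl(string wwwAuthenticate)
{
    const string scheme = "Bearer ";
    if (!wwwAuthenticate.StartsWith(scheme, StringComparison.OrdinalIgnoreCase))
        return null;
    // Simplification: assumes no commas inside quoted parameter values.
    foreach (var part in wwwAuthenticate[scheme.Length..].Split(','))
    {
        var pair = part.Split('=', 2);
        if (pair.Length == 2 && pair[0].Trim() == "resource_metadata")
            return pair[1].Trim().Trim('"');
    }
    return null;
}

// Reads what the client needs next: which authorization server to register
// with and obtain tokens from, and which scopes to request.
(string Resource, string[] AuthorizationServers, string[] Scopes) ReadResourceMetadata(string json)
{
    using var doc = JsonDocument.Parse(json);
    var root = doc.RootElement;
    string[] Strings(string name) => root.TryGetProperty(name, out var array)
        ? array.EnumerateArray().Select(e => e.GetString()!).ToArray()
        : Array.Empty<string>();
    return (root.GetProperty("resource").GetString()!, Strings("authorization_servers"), Strings("scopes_supported"));
}

// Hypothetical samples.
var header = "Bearer resource_metadata=\"https://localhost:7141/.well-known/oauth-protected-resource\"";
var sample = """
    {
      "resource": "https://localhost:7141/",
      "authorization_servers": ["https://localhost:5001/"],
      "scopes_supported": ["mcp:tools"],
      "resource_documentation": "https://docs.example.com/api/weather"
    }
    """;

var metadata = ReadResourceMetadata(sample);
Console.WriteLine(ResourceMetadataUrl(header));
Console.WriteLine($"{metadata.AuthorizationServers[0]} {string.Join(' ', metadata.Scopes)}");
```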

The rest of the Program.cs file contains a couple more points of interest:

```csharp
builder.Services.AddHttpContextAccessor();

builder.Services.AddMcpServer()
    .WithTools<WeatherTools>()
    .WithHttpTransport();

builder.Services.AddHttpClient("WeatherApi", client =>
{
    client.BaseAddress = new Uri("https://api.weather.gov");
    // api.weather.gov expects callers to identify themselves with a User-Agent header
    client.DefaultRequestHeaders.UserAgent.Add(new ProductInfoHeaderValue("weather-tool", "1.0"));
});

var app = builder.Build();

app.UseAuthentication();
app.UseAuthorization();

// Map the MCP endpoints and require an authenticated caller on all of them
app.MapMcp().RequireAuthorization();

app.Run();
```

Within what is otherwise fairly standard code for a .NET API, let’s look closer at the two MCP-specific bits from the preceding code block.

The call to builder.Services.AddMcpServer adds the components our host needs to function as an MCP server. The chained WithTools call registers the tools our MCP server exposes, and the call to WithHttpTransport registers the services the server needs to communicate with MCP clients over HTTP.
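The WeatherTools class registered above is not shown in this post. In the MCP C# SDK, a tool type is typically a class marked with [McpServerToolType] whose methods carry [McpServerTool] and a [Description], and the SDK advertises those methods to clients. The sketch below leaves the SDK out and shows only the kind of logic such a tool method might contain: reducing an api.weather.gov alerts response (a GeoJSON FeatureCollection) to compact text for the LLM. The method name, output format, and sample payload are hypothetical; the property names follow the National Weather Service alert schema.

```csharp
using System;
using System.Linq;
using System.Text.Json;

// Turns an alerts FeatureCollection into plain text. Missing or null
// properties (instruction is often null) are rendered as "n/a".
string FormatAlerts(string geoJson)
{
    using var doc = JsonDocument.Parse(geoJson);
    var features = doc.RootElement.GetProperty("features").EnumerateArray().ToList();
    if (features.Count == 0)
        return "No active alerts.";

    return string.Join("\n--\n", features.Select(feature =>
    {
        var props = feature.GetProperty("properties");
        string Field(string name) =>
            props.TryGetProperty(name, out var v) && v.ValueKind == JsonValueKind.String
                ? v.GetString()!
                : "n/a";
        return $"Event: {Field("event")}\nArea: {Field("areaDesc")}\n" +
               $"Severity: {Field("severity")}\nInstruction: {Field("instruction")}";
    }));
}

// Made-up payload for illustration, not real alert data.
var sample = """
    {
      "type": "FeatureCollection",
      "features": [
        {
          "properties": {
            "event": "Flood Watch",
            "areaDesc": "Example County",
            "severity": "Moderate",
            "instruction": null
          }
        }
      ]
    }
    """;

Console.WriteLine(FormatAlerts(sample));
```

Curating the output inside the MCP server, rather than handing the raw payload to the model, is one way to apply the earlier advice about encapsulating behavior behind an MCP tool.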

Finally, before the call to app.Run, there is a call to app.MapMcp().RequireAuthorization() to create the endpoints necessary for the MCP server and enforce authentication and authorization on all MCP endpoints.

Client Access To MCP Server Tools

Let’s talk about clients again, because this may be the most crucial element of your AI workflow.

Currently, there are many options for client tools. Whether it’s a native application running in a user’s local environment, a hosted option shared across an organization, or an agent-based tool running in a data center, each is an additional client within your security context. What may have started as a simple architecture before the introduction of AI now includes new security considerations and implications for your solution.

As it relates to your IdP, and more specifically Duende IdentityServer, this could mean an increase in the number of unique client identifiers in your solution. For example, a team of developers using a code generation tool such as Claude Code with VS Code may have to register one or two clients per team member. In a team of 10 developers, that’s up to 20 additional clients. That may be worth the increased operational and cognitive cost for some organizations, but it may give others pause.

For teams seeking to integrate AI into their workflows with a more secure and effective operational model, consider developing custom clients that can operate in shared environments. Good examples include GitHub Copilot reviewing pull requests. These tools operate as a single client, but produce valuable output that everyone in a team can assess and reason about, and have resulting artifacts persist in a shared space. These tools act on behalf of your organization but aren’t directly accessible to users.

Another option is to build a shared UI, such as a chat interface, where interactions between a client and your MCP servers are more predictable. These UIs can be secured using OpenID Connect, but the client relationship stays static, similar to a web application or mobile application. The approach still provides the value of AI and MCP, but without the need for Dynamic Client Registration. Admittedly, this may require more custom development and has limited access to environments.

As mentioned previously, the security surrounding MCPs and AI workflows remains a topic of ongoing discussion. There are still many unanswered questions regarding client access, and the situation may change by the time this article is published. MCPs will likely still exist, but the way clients and servers interact and secure themselves may change. Additionally, as these clients improve their security hygiene, we can expect to see them use more advanced security features, such as Demonstrating Proof of Possession (DPoP) and other enterprise-level security measures. In short, keep an eye out for evolving developments.

In general terms, teams will likely have a mixture of off-the-shelf clients and custom clients, running across several environments, from local to production. AI adoption dramatically increases the security surface of an organization, and careful consideration must be given to when and where to apply AI alongside MCP services. Securing your clients is an imperative for any organization looking to adopt AI.

Putting It All Together

Now that you’ve configured Duende IdentityServer with DCR and have a working MCP server, you need to register a client and call our protected resource. For this tutorial, we have built a custom client using C# and .NET; however, in most cases, you’ll use a tool that supports MCP, such as VS Code, JetBrains IDEs, or various terminal applications. Refer to the documentation of your specific tool to determine if it supports DCR. You can find the client code in the sample, but for this tutorial, screenshots will suffice.

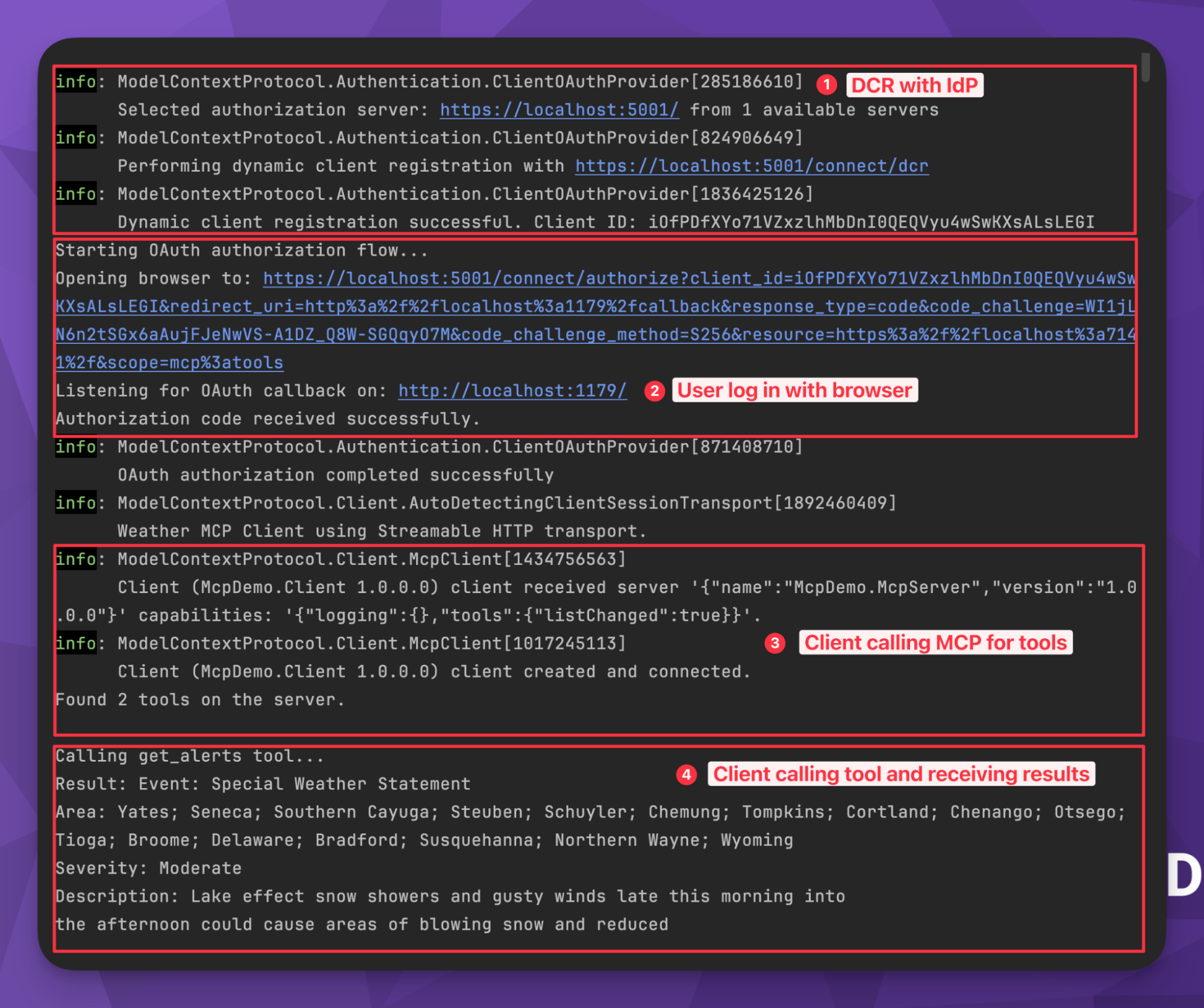

When you run our client, you’ll see the result from our protected resource, a weather API.

You’ll see four distinct steps in the execution’s output:

- The custom client registers with our Duende IdentityServer instance using Dynamic Client Registration. After successfully registering, the client receives a generated client ID.

- Because it is registered as a public client, the client then asks the user to authenticate in a browser. Once the user authenticates, the client receives an authorization code, which it exchanges for the access token needed to access protected resources.

- Now that the client is registered and the user is authenticated, we call the MCP server to retrieve the available tools. In our case, that’s a weather alerts tool.

- We issue a call through the client to our API using the user’s access token, receiving the final results securely.

It’s as simple as that!
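Step two above is an OAuth authorization code flow. Because the dynamically registered client is public and has no secret, it should protect the flow with PKCE (RFC 7636), and the MCP authorization specification also asks clients to name the target MCP server in a resource parameter (RFC 8707). Here is a minimal sketch of building that browser redirect; the client ID, redirect URI, and authorize endpoint are hypothetical placeholders, and a real client would read the endpoint from the server metadata.

```csharp
using System;
using System.Linq;
using System.Security.Cryptography;
using System.Text;

// Base64url encoding without padding, as PKCE (RFC 7636) requires.
string Base64Url(byte[] bytes) =>
    Convert.ToBase64String(bytes).TrimEnd('=').Replace('+', '-').Replace('/', '_');

// The verifier stays with the client; only its SHA-256 hash goes into the redirect.
string NewCodeVerifier() => Base64Url(RandomNumberGenerator.GetBytes(32)); // 43 characters
string CodeChallenge(string verifier) => Base64Url(SHA256.HashData(Encoding.ASCII.GetBytes(verifier)));

string BuildAuthorizeUrl(string authorizeEndpoint, string clientId, string redirectUri,
    string scope, string resource, string state, string codeChallenge)
{
    var parameters = new (string Key, string Value)[]
    {
        ("response_type", "code"),
        ("client_id", clientId),
        ("redirect_uri", redirectUri),
        ("scope", scope),
        ("state", state),
        ("code_challenge", codeChallenge),
        ("code_challenge_method", "S256"),
        // RFC 8707 resource indicator: the MCP server the token is intended for.
        ("resource", resource)
    };
    var query = string.Join("&", parameters.Select(p => $"{p.Key}={Uri.EscapeDataString(p.Value)}"));
    return $"{authorizeEndpoint}?{query}";
}

// Hypothetical values for illustration.
var verifier = NewCodeVerifier();
var challenge = CodeChallenge(verifier);
var url = BuildAuthorizeUrl(
    "https://localhost:5001/connect/authorize",
    "hypothetical-client-id",
    "http://localhost:8765/callback",
    "openid profile mcp:tools",
    "https://localhost:7141/",
    Base64Url(RandomNumberGenerator.GetBytes(16)),
    challenge);
Console.WriteLine(url);
```

After the user signs in, the client exchanges the returned authorization code, together with the original code_verifier, at the token endpoint to obtain the access token used in the final step.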

A consideration when working with clients is how they store their configuration and sensitive information, such as client identifiers, refresh tokens, and access tokens. While those considerations are outside the scope of this tutorial, they should be a top priority for anyone building their own clients, and a key question to ask when adopting someone else’s client implementation.

Client ID Metadata Document Authentication Flow and the Future

While this article has discussed Dynamic Client Registration, new discussions are emerging at the time of writing around a new authentication flow for interactive clients, known as the OAuth Client ID Metadata Document.

The draft specifies a mechanism by which a client can identify itself to an authorization server without dynamic client registration. The client uses a URL as its client identifier, and that URL points to a JSON document describing the client, such as the following:

```json
{
  "client_id": "https://app.example/client_id.json",
  "client_name": "Application Name",
  "redirect_uris": ["https://app.example/callback"],
  "post_logout_redirect_uris": ["https://app.example/logout"],
  "client_uri": "https://app.example/",
  "logo_uri": "https://app.example/logo.png",
  "tos_uri": "https://app.example/tos.html",
  "scope": "openid profile offline_access webid",
  "grant_types": ["refresh_token", "authorization_code"],
  "response_types": ["code"]
}
```

The JSON document is a manifest, containing the information an authorization server, such as Duende IdentityServer, would need to register the client automatically. This approach centralizes the registration process, putting more emphasis on the authorization server’s ability to distinguish between known and unknown clients. In practice, Duende IdentityServer users can implement a mechanism as simple as an allow list or a more complex vetting process that approves clients based on various criteria. Luckily, customization is a key feature that Duende IdentityServer offers developers.
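As a rough illustration of the allow-list idea, the sketch below checks a fetched Client ID Metadata Document before trusting it. It assumes two rules from the draft (the client_id must be an https URL, and the document’s client_id must match the URL it was fetched from) plus a hypothetical allow list of trusted hosts. It is not a Duende IdentityServer API, and a real implementation would also need to fetch and cache the document and validate its redirect URIs.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical allow list maintained by the authorization server operator.
var trustedHosts = new HashSet<string>(StringComparer.OrdinalIgnoreCase) { "app.example" };

// Returns null when the document is acceptable, otherwise a reason for rejecting it.
string? CheckClientMetadata(string clientIdUrl, string documentJson)
{
    if (!Uri.TryCreate(clientIdUrl, UriKind.Absolute, out var url) || url.Scheme != Uri.UriSchemeHttps)
        return "client_id must be an absolute https URL";
    if (!trustedHosts.Contains(url.Host))
        return $"host not on allow list: {url.Host}";

    using var doc = JsonDocument.Parse(documentJson);
    if (!doc.RootElement.TryGetProperty("client_id", out var declared)
        || declared.ValueKind != JsonValueKind.String
        || declared.GetString() != clientIdUrl)
        return "document client_id does not match the URL it was fetched from";
    return null;
}

// Trimmed-down version of the example document above.
var document = """{ "client_id": "https://app.example/client_id.json", "client_name": "Application Name" }""";
Console.WriteLine(CheckClientMetadata("https://app.example/client_id.json", document) ?? "accepted");
Console.WriteLine(CheckClientMetadata("https://other.example/client_id.json", document) ?? "accepted");
```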

While this is not officially supported yet, our goal at Duende is always to be “spec-compliant”, and we are currently investigating this draft specification for use cases.

Conclusion

The integration of AI agents into organizational workflows presents both significant opportunities and considerable challenges, particularly in terms of security and data integrity. The Model Context Protocol offers a powerful solution by enabling AI applications to securely access an organization's pre-existing, high-value data and operational systems. This approach mitigates the risks associated with LLM hallucinations and leverages decades of investment in reliable software. As you’ve seen, getting started is straightforward with Duende IdentityServer and dynamic client registration.

As we've discussed, building your own MCP servers and integrating them with a robust Identity Provider, such as Duende IdentityServer, provides a secure and deterministic way to introduce AI agents into an existing organization. By using industry best practices such as OAuth and OpenID Connect, and enabling features like Dynamic Client Registration, organizations can ensure that all interactive clients and AI agents operate within a well-defined security context. All the while, organizations can take advantage of their existing investments in applications, APIs, and security.

The architectural considerations and questions outlined in this document are crucial for successful implementation. By thoughtfully planning and leveraging Duende IdentityServer, developers can create secure, reliable, and highly effective AI-powered solutions that build upon existing infrastructure, rather than replacing it. The future of AI in the enterprise relies on safe, well-governed access to information, and with MCP and Duende IdentityServer, that future is within reach.